Multimodal AI Voice Assistants 2025: How Vision + Audio Powers Next-Gen Copilot Apps

Introduction to Multimodal AI Voice Assistants in 2025

(Vision + Audio + Language Intelligence Explained)

AI voice assistants have evolved rapidly over the last decade—from basic command-based systems to conversational interfaces powered by large language models (LLMs). However, 2025 marks a major turning point with the rise of multimodal AI voice assistants that can simultaneously understand voice, visuals, and contextual data.

Traditional voice assistants relied on a single input channel: audio. Without visual or contextual cues, they often misread intent, offered only shallow interactions, and made frequent errors. Multimodal AI assistants, in contrast, combine speech, computer vision, and language intelligence, so a system can “see” what the user is showing it, “hear” what they say, and “reason” over both together.

Across enterprise and consumer markets in the USA, UK, UAE, Singapore, Canada, and Europe, demand for AI copilots is accelerating—driven by productivity needs, operational automation, and the expectation of more natural human–AI interaction. In 2025, multimodal AI is no longer experimental; it is becoming a strategic differentiator.

This guide explains how multimodal AI voice assistants work, why they matter now, and how organizations can build enterprise-ready copilot applications.

What Are Multimodal AI Copilots?

(How Voice, Vision & Context Work Together)

A multimodal AI copilot is an intelligent assistant capable of processing and reasoning across multiple data modalities—most commonly voice (audio), vision (images/video), and language (text/context)—within a single interaction flow.

How Multimodal AI Works in Practice

Instead of treating voice and vision as separate systems, multimodal copilots fuse inputs at the model and orchestration level:

Audio: User speech, tone, and intent

Vision: Images, video streams, UI screens, physical environments

Context: User history, device state, enterprise data, workflows

For example, a user might say:

“What’s wrong with this dashboard?” while pointing a camera at a screen.

A multimodal AI assistant can analyze the visual layout, interpret the question, and respond contextually—something traditional voice assistants cannot do.

This fusion unlocks richer, more accurate interactions and enables copilots to operate in complex, real-world environments.
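The dashboard example can be sketched as a simple fusion step. This sketch makes several assumptions. A speech-to-text engine has already produced a transcript. A vision model has already produced a text description of the camera frame. The names `Turn` and `build_prompt` are hypothetical and not from any specific product. Many multimodal models accept raw image bytes directly, so a text caption is just one way to pass visual context.

```python
from dataclasses import dataclass, field


@dataclass
class Turn:
    """One user interaction combining all three modalities (hypothetical schema)."""
    transcript: str                               # audio, already converted to text by STT
    image_description: str                        # vision, e.g. a caption from a vision model
    context: dict = field(default_factory=dict)   # user history, device state, etc.


def build_prompt(turn: Turn) -> str:
    """Fuse audio, vision, and context into a single prompt for the reasoning model."""
    context_lines = [f"- {key}: {value}" for key, value in sorted(turn.context.items())]
    return "\n".join([
        "Context:",
        *context_lines,
        f"What the camera sees: {turn.image_description}",
        f"User said: {turn.transcript}",
        "Answer using both what the user said and what the camera sees.",
    ])


turn = Turn(
    transcript="What's wrong with this dashboard?",
    image_description="Sales dashboard; the 'Revenue' chart shows a flat zero line for March.",
    context={"role": "sales manager", "device": "tablet"},
)
print(build_prompt(turn))
```

With both modalities in one request, the model can connect the spoken question ("this dashboard") to the specific chart that looks wrong.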

Key Technologies Powering Multimodal AI Assistants

(LLMs, Speech Recognition, Computer Vision & AI Agents)

Building multimodal AI voice assistants requires a sophisticated technology stack designed for real-time perception, reasoning, and response.

Large Language Models & Multimodal Models

Many modern LLMs have been extended with multimodal capabilities, allowing a single model to process text, images, and, in some cases, audio. These models form the reasoning core of AI copilots, enabling natural dialogue and decision-making.

Speech Recognition & Voice Synthesis

Speech-to-Text (STT): Converts spoken language into accurate text

Text-to-Speech (TTS): Generates natural, expressive voice responses

Supports multilingual, accent-aware, and domain-specific speech models

Computer Vision & Image Understanding

Computer vision enables assistants to interpret:

Images and video feeds

Screens, documents, and objects

Gestures, facial cues, and spatial context

This capability is critical for vision and voice AI solutions in enterprise, healthcare, retail, and mobility applications.

AI Agents & Orchestration Layers

AI agents manage tasks, tools, memory, and workflows. Orchestration layers ensure that voice, vision, and language models work together seamlessly—maintaining context across interactions and devices.
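A minimal sketch of an orchestration layer follows. It routes each request to a registered tool and keeps a short memory so follow-up questions retain context. The class, the tool names, and the keyword-based routing are all hypothetical simplifications. A real agent framework would typically let the LLM choose the tool and would call real STT, vision, and calendar services.

```python
from typing import Callable


class Orchestrator:
    """Hypothetical orchestration layer: routes each request to a registered
    tool and keeps a short memory of recent turns for follow-up questions."""

    def __init__(self, max_memory: int = 5) -> None:
        self.tools: dict[str, Callable[[str], str]] = {}
        self.memory: list[tuple[str, str]] = []  # (user request, assistant reply)
        self.max_memory = max_memory

    def register(self, name: str, tool: Callable[[str], str]) -> None:
        self.tools[name] = tool

    def route(self, request: str, has_image: bool) -> str:
        # Keyword rules keep the sketch simple; an LLM-based agent would
        # usually pick the tool itself from the request and the tool list.
        if has_image:
            return "vision"
        if "schedule" in request.lower():
            return "calendar"
        return "chat"

    def handle(self, request: str, has_image: bool = False) -> str:
        reply = self.tools[self.route(request, has_image)](request)
        self.memory.append((request, reply))
        self.memory = self.memory[-self.max_memory:]  # keep only the most recent turns
        return reply


bot = Orchestrator(max_memory=2)
bot.register("vision", lambda request: "Analyzing the image you shared.")
bot.register("calendar", lambda request: "Meeting scheduled.")
bot.register("chat", lambda request: f"You asked: {request}")
print(bot.handle("Schedule a review for Friday"))  # Meeting scheduled.
```

Bounding the memory is a deliberate choice. It keeps prompts short and limits how much personal data the assistant holds at any one time.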

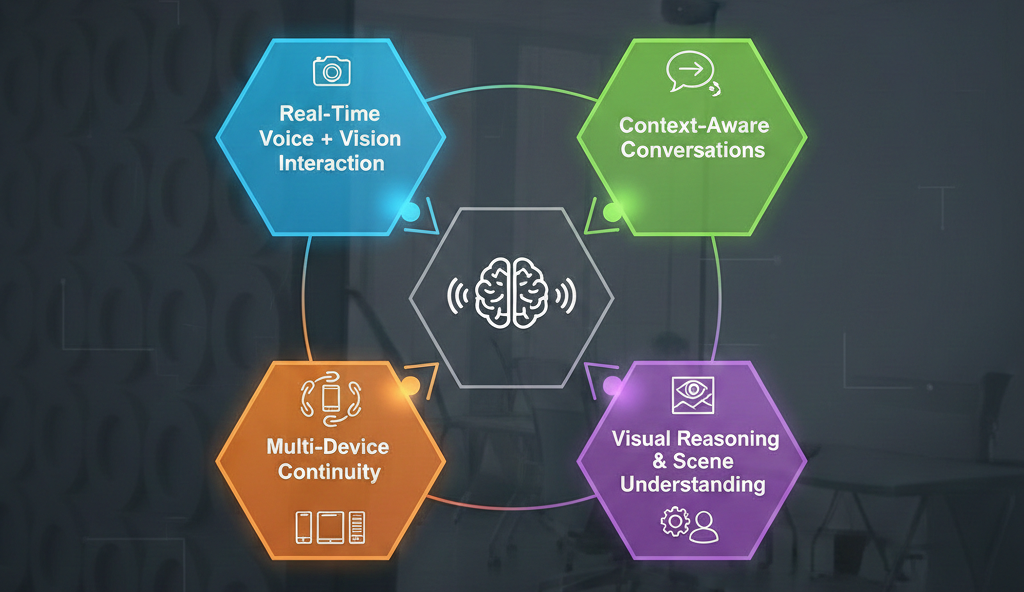

Core Features of Next-Gen AI Voice Assistant Apps

(Real-Time Interaction, Visual Understanding & Context Awareness)

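Multimodal AI copilots go far beyond basic Q&A. Key features include:

Real-time interaction: Low-latency voice conversations that keep pace with the user

Visual understanding: Interpreting screens, documents, objects, and camera feeds

Context awareness: Using user history, device state, and enterprise data to keep interactions coherent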

Top Use Cases of Multimodal AI Copilot Applications

(Enterprise, Healthcare, Retail, Mobility & Smart Devices)

Enterprise Productivity Copilots

In the USA and UK, enterprises are deploying AI copilot apps to:

Analyze dashboards and reports visually

Assist with meetings, workflows, and decision-making

Reduce cognitive load for employees

Healthcare Assistants

Multimodal AI supports:

Clinical documentation using voice + image inputs

Patient guidance with visual explanations

Compliance-aware workflows in regulated environments

Retail & Commerce Assistants

Retailers in Europe, UAE, and Singapore use multimodal AI applications to:

Power smart shopping assistants

Offer visual product discovery and voice-based support

Enhance omnichannel customer experiences

Mobility & Automotive Copilots

In-vehicle assistants leverage vision and speech to:

Interpret road conditions

Assist drivers hands-free

Improve safety and navigation

Smart Home & IoT Ecosystems

Multimodal AI enables assistants to understand environments visually and respond intelligently to voice commands across devices.

Benefits of Multimodal AI Over Traditional Voice Assistants

(Accuracy, Productivity & User Experience)

| Aspect | Traditional Voice AI | Multimodal AI |
|---|---|---|
| Input | Audio only | Voice + Vision + Context |
| Accuracy | Limited | Significantly higher |
| Error Rate | High in complex tasks | Reduced misinterpretation |
| UX | Command-based | Conversational & intuitive |
| Enterprise Value | Low–moderate | High productivity impact |

By combining modalities, multimodal AI voice assistants can recognize intent more reliably and reduce hallucinations by grounding answers in what the user is actually showing. They can also drive higher user engagement.

Multimodal AI Voice Assistant Development Process

(Architecture, Training & Deployment)

System Architecture & Data Pipelines

Design begins with defining data flows for audio, visual, and contextual inputs—ensuring low latency and high reliability.
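One common way to keep latency low is to run the audio and vision branches concurrently rather than one after the other. The sketch below does this and enforces a single time budget for the whole turn. `transcribe` and `describe_frame` are hypothetical placeholders that simulate model latency with `asyncio.sleep`. The 1-second budget is an illustrative assumption.

```python
import asyncio
import time


async def transcribe(audio_chunk: bytes) -> str:
    # Hypothetical placeholder for a real speech-to-text call.
    await asyncio.sleep(0.2)  # simulated model/network latency
    return "what's wrong with this dashboard"


async def describe_frame(frame: bytes) -> str:
    # Hypothetical placeholder for a real vision-model call.
    await asyncio.sleep(0.3)
    return "revenue chart shows zero for March"


async def process_turn(audio_chunk: bytes, frame: bytes, timeout_s: float = 1.0) -> dict:
    """Run the audio and vision branches concurrently under one latency budget."""
    transcript, description = await asyncio.wait_for(
        asyncio.gather(transcribe(audio_chunk), describe_frame(frame)),
        timeout=timeout_s,  # raises a timeout error if the whole turn is too slow
    )
    return {"transcript": transcript, "vision": description}


start = time.perf_counter()
result = asyncio.run(process_turn(b"audio bytes", b"frame bytes"))
elapsed = time.perf_counter() - start
print(result, f"{elapsed:.2f}s")
```

Because the branches overlap, the turn takes roughly as long as the slower branch (about 0.3 s here) rather than the sum of both.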

Model Selection & Training

Choosing the right combination of LLMs, vision models, and speech engines is critical. Training includes domain adaptation and fine-tuning.

Multimodal Fusion Strategy

Effective copilots rely on intelligent fusion—deciding when and how each modality influences reasoning.
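One widely used option is confidence-weighted late fusion. Each modality produces its own intent scores, and the scores are weighted by how much that modality can be trusted in the current turn. For example, speech might get less weight in a noisy room. The sketch below is an illustration of that technique, not a prescribed design. The intent labels and confidence values are hypothetical.

```python
def fuse_intents(
    speech_scores: dict[str, float],
    vision_scores: dict[str, float],
    speech_conf: float,
    vision_conf: float,
) -> dict[str, float]:
    """Confidence-weighted late fusion of per-intent scores from two modalities.

    Each modality's scores are weighted by how much it is trusted for this turn,
    averaged, and then renormalized so the fused scores sum to 1."""
    total_weight = speech_conf + vision_conf
    if total_weight == 0:
        raise ValueError("at least one modality must have non-zero confidence")
    intents = set(speech_scores) | set(vision_scores)
    fused = {
        intent: (speech_conf * speech_scores.get(intent, 0.0)
                 + vision_conf * vision_scores.get(intent, 0.0)) / total_weight
        for intent in intents
    }
    norm = sum(fused.values())
    return {intent: score / norm for intent, score in fused.items()} if norm else fused


fused = fuse_intents(
    speech_scores={"explain_chart": 0.5, "open_report": 0.5},  # speech alone is ambiguous
    vision_scores={"explain_chart": 0.9, "open_report": 0.1},  # the camera shows a chart
    speech_conf=0.4,  # noisy environment: trust speech less
    vision_conf=0.8,
)
best = max(fused, key=fused.get)
print(best, round(fused[best], 2))  # explain_chart 0.77
```

Here the spoken request alone cannot separate the two intents. The visual evidence breaks the tie, which is the kind of decision a fusion strategy has to make.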

Testing, Deployment & Scaling

Enterprise deployment requires rigorous testing, monitoring, and scalable infrastructure to support global users across regions like Canada, Europe, and the UAE.
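For monitoring, teams often track tail latency rather than averages, because a slow 5% of voice turns is very noticeable to users. The sketch below checks a 95th-percentile latency target using Python's standard `statistics.quantiles`. The sample values and the 800 ms budget are synthetic, illustrative assumptions.

```python
import statistics


def check_latency_slo(latencies_ms: list[float], p95_budget_ms: float) -> tuple[float, bool]:
    """Return the 95th-percentile latency and whether it is within budget."""
    if len(latencies_ms) < 2:
        raise ValueError("need at least two latency samples")
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(latencies_ms, n=20)[-1]
    return p95, p95 <= p95_budget_ms


# Synthetic end-to-end response times (ms) from a load test: 100, 110, ..., 1090.
samples = [float(ms) for ms in range(100, 1100, 10)]
p95, within_budget = check_latency_slo(samples, p95_budget_ms=800.0)
print(f"p95={p95:.1f} ms, within budget: {within_budget}")  # p95=1049.5 ms, within budget: False
```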

Challenges, Privacy & Security Considerations

(Data Protection, Compliance & Ethical AI)

Data Privacy & Consent

Voice and visual data are highly sensitive. Systems must implement strict consent mechanisms and data minimization strategies.
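Consent checks and data minimization can be applied as an explicit filter before anything is sent to models or stored. In the sketch below, the `Consent` flags, the field names, and the choice to keep transcripts and descriptions instead of raw recordings are all hypothetical. They illustrate the principle rather than a compliance-approved policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Consent:
    """Per-user consent flags (hypothetical schema)."""
    audio: bool
    camera: bool
    store_history: bool


# Fields the assistant actually needs per modality; everything else is dropped.
ALLOWED_FIELDS = {
    "audio": {"transcript"},           # keep the transcript, not the raw recording
    "camera": {"image_description"},   # keep a description, not the raw frame
}


def minimize(payload: dict, consent: Consent) -> dict:
    """Return only the data the user consented to, reduced to the needed fields."""
    kept = {}
    if consent.audio:
        kept.update({k: v for k, v in payload.items() if k in ALLOWED_FIELDS["audio"]})
    if consent.camera:
        kept.update({k: v for k, v in payload.items() if k in ALLOWED_FIELDS["camera"]})
    if consent.store_history and "history" in payload:
        kept["history"] = payload["history"]
    return kept


raw = {
    "raw_audio": b"\x00\x01",
    "transcript": "what's wrong with this dashboard",
    "raw_frame": b"\xff\xd8",
    "image_description": "revenue chart shows zero for March",
    "history": ["previous question"],
}
print(minimize(raw, Consent(audio=True, camera=False, store_history=False)))
# {'transcript': "what's wrong with this dashboard"}
```

Raw audio and raw frames never leave the filter in this sketch. Even with full consent, only derived text is passed on.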

Security of Multimodal Data

Encryption, access controls, and secure storage are essential to protect audio and visual streams.

Regulatory Compliance

Depending on the use case and geography, compliance may include:

GDPR (EU, UK)

HIPAA (US healthcare use cases)

SOC 2 and enterprise security standards

Ethical AI & Bias Mitigation

Responsible AI practices help reduce bias, ensure transparency, and build user trust.

Market Trends & Future of Multimodal AI Assistants (2025–2027)

(Adoption, Innovation & Business Impact)

Key trends shaping the next phase include:

Rapid enterprise adoption of AI copilots in the USA and Europe

Growth of AI agents with vision and speech for complex workflows

Integration with AR/VR and spatial computing

Increasing focus on compliance-ready, industry-specific AI

Multimodal AI is moving from innovation labs to core enterprise infrastructure.

How RSunBeat Software Builds Intelligent Multimodal AI Copilot Apps

(Custom Development & Enterprise-Ready Solutions)

At RSunBeat Software, we help organizations design and build enterprise-grade multimodal AI voice assistants tailored to real-world business needs.

At a high level, our approach focuses on:

Custom multimodal AI development aligned with business goals

Scalable, cloud-native architectures

Security-first, compliance-aware AI systems

Continuous optimization and long-term support

We work with enterprises across the USA, UK, UAE, Singapore, Canada, and Europe to turn advanced AI capabilities into practical, production-ready copilot applications.

Conclusion

Multimodal AI voice assistants represent the next generation of intelligent systems—capable of understanding the world through vision, voice, and context. In 2025 and beyond, these copilots will redefine how humans interact with software, devices, and enterprises.

Organizations that invest early in multimodal AI applications can gain a meaningful advantage in productivity, user experience, and innovation. The key is partnering with a development team that understands not just AI models but also enterprise deployment, security, and real-world impact.

If you’re exploring AI copilot app development or evaluating multimodal AI for your business, now is the time to start the conversation.

Frequently Asked Questions (FAQ)

1. What is a multimodal AI voice assistant?

A multimodal AI voice assistant uses voice, text, images, and context together to understand and respond more accurately than traditional voice-only assistants.

2. How is a multimodal AI assistant different from a chatbot?

Chatbots mainly rely on text or voice. Multimodal AI assistants can see images, hear voice commands, and understand context to act like intelligent AI copilots.

3. What technologies power multimodal AI copilot apps?

These apps use large language models (LLMs), speech recognition, computer vision, AI agents, and cloud-based AI infrastructure.

4. What are common use cases of AI voice copilot apps?

Multimodal AI assistants are used in enterprise automation, customer support, healthcare, smart devices, retail, mobility apps, and productivity tools.

5. Are multimodal AI assistants secure for business use?

Yes, when built properly with encrypted data handling, access controls, compliance-ready architecture, and responsible AI practices.

6. How long does it take to develop a multimodal AI assistant?

Timelines depend on scope, features, and integrations. A focused MVP can usually launch sooner than a full-featured system, and a scalable architecture lets it grow without a rebuild.

7. Can multimodal AI assistants be customized for enterprises?

Yes, AI copilot apps can be fully customized for business workflows, industry use cases, and internal systems.

Testimonials: What Our Clients Are Saying

Your trust drives our passion. Here’s how we’ve helped businesses like yours thrive with tailored solutions and unmatched support.

Hussein Termos

CEO

“RSunBeat Software is a top-tier web development company for the real estate industry. They delivered a user-friendly, customized platform that met our needs perfectly. Their innovative solutions, on-time delivery, and excellent support made the process seamless. Highly recommended for exceptional development services!”

James Carten

Founder & CEO

“Before working with RSunBeat Software, our real estate business faced issues with unreliable mobile apps, poor user experiences, and missed deadlines. RSunBeat delivered a seamless, high-performing app with excellent functionality, intuitive design, and timely execution, transforming our operations. Their expertise and dedication make them a trusted partner.”

Darla Shewmaker

VP of Marketing

“RSunBeat Software developed a great, user-friendly mobile app that exceeded our expectations. The team was extremely professional, kept us updated throughout the process and completed the work on time. Their expertise and dedication made the whole project seamless. We highly recommend RSunBeat for their great work and reliability!”

Nicholas Toh

Project Manager

“RSunBeat Software delivered an amazing web and app development experience. Their team was highly professional, detail-oriented, and dedicated to bringing our vision to life. The final product was seamless, user-friendly, and exceeded our expectations. We highly recommend them for top-notch development services!”

Our Technology Experts Are Change Catalysts

Mail to Our Sales Department

info@rsunbeatsoftware.com

Our Skype ID

Rsunbeat Software